GPT-4 faces a challenger: Can Writer’s finance-focused LLM take the lead in banking?

- We often focus on chatbots built by banks and financial firms, but today, we explore the engines driving chatbot interactions and platform automation.

- Banks typically turn to GPT-4 for LLM solutions, but a potential rival is emerging. San Francisco’s Writer, a gen AI company, is pushing forward in enterprise AI with domain-specific LLMs like Palmyra Fin.

Banks are heavily investing in Large Language Models (LLMs) to enhance both internal operations and customer interactions — yet building a model that excels at both is a significant challenge.

A recent study by Writer, a San Francisco-based generative AI company that provides a full-stack AI platform for enterprise use, found that ‘thinking’ LLMs like OpenAI’s o1 and DeepSeek R1 produce false information in up to 41% of tested cases.

The study evaluated advanced reasoning models in real-world financial scenarios, highlighting the risks such inaccuracies pose to regulated industries like financial services. The research also showed that traditional chat LLMs outperform thinking models in accuracy.

LLMs are used in three main ways within financial services:

- Platforms for operations & automation – LLMs power internal enterprise platforms to streamline workflows, automate document processing, summarize reports, analyze data, and assist employees. For example, Ally Bank’s proprietary AI platform, Ally.ai, uses LLMs to improve its marketing and business processes.

- Task-specific AI assistants – LLMs enhance specific financial tasks such as fraud detection, compliance monitoring, or investment analysis. An example of this is J.P. Morgan’s IndexGPT, which aims to provide AI-driven investment insights.

- Chatbots & virtual assistants – LLMs improve customer-facing chatbots by making them more conversational and executing basic tasks. Bank of America’s virtual assistant, Erica, provides banking insights to its customers.

We often focus on chatbots built by banks and financial firms, but today, we explore the underlying technology behind them — the engines driving chatbot interactions and platform automation.

We take a closer look at the LLMs driving these AI systems, their challenges, and how financial firms can train enterprise-grade models to capitalize on their potential while controlling their risks.

Thinking LLMs vs. traditional chat LLMs

Thinking LLMs, also referred to as CoT (Chain-of-Thought) models, are designed to simulate multi-step reasoning and decision-making, producing more nuanced responses than simple retrieval or summarization, says Waseem Alshikh, CTO and co-founder of Writer.

“These models are not truly ‘thinking’ but are instead trained to generate outputs that resemble reasoning patterns or decompose complex problems into intermediate reasoning steps,” Waseem notes.

Traditional chat LLMs, however, tend to be more accurate, according to Waseem. These models mainly use pattern matching and next-token prediction, responding in a conversational manner based on pre-trained knowledge and contextual cues. While these models may struggle with complex queries at times, they produce fewer hallucinations, making them more reliable for regulatory compliance, according to Writer’s research.

Bank of America’s virtual assistant, Erica, uses a traditional chat model to assist customers with banking tasks like balance inquiries, bill payments, and credit report updates. By leveraging structured data and predefined algorithms, it provides accurate and reliable responses while reducing the likelihood of misinformation.

When handling complex queries, however, traditional chat LLMs can also hallucinate.

Morgan Stanley’s AI Assistant, for example, uses OpenAI’s GPT-4 to scan 100,000+ research reports and provide quick insights to financial advisors. Beyond retrieving reports, it summarizes complex data to support portfolio strategy recommendations.

Morgan Stanley’s AI tool encountered accuracy issues stemming from hallucinated responses. Shortly after its launch in 2023, sources within the company described the tool as ‘spotty on accuracy,’ with users frequently receiving responses like “I’m unable to answer your question.”

While Morgan Stanley has been proactive in fine-tuning OpenAI’s GPT-4 model to assist its financial advisors, the company acknowledges the challenges posed by AI hallucinations. To reduce inaccuracies, the bank curated training data and limited prompts to business-related topics.

But how can financial firms navigate the trade-off between AI sophistication and accuracy?

Best practices for implementing thinking LLMs in financial services

Though thinking LLMs are more prone to hallucinations, their advanced capabilities make it difficult for financial firms to simply rule them out. With the right strategic approaches, they can be deployed effectively.

Waseem outlines the key steps:

- Pair thinking LLMs with retrieval-based AI to keep outputs accurate.

- Train models on finance-specific datasets with domain experts involved in evaluation. Prioritize context grounding over general knowledge generation.

- Limit thinking LLMs to internal operations instead of customer-facing advice.

- Apply human oversight for critical decisions.
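As a rough illustration, the steps above could be encoded as a simple routing policy. This is a minimal sketch, not anything Writer or the banks mentioned here have published: the `Request` fields, the `route` function, and the labels it returns are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """A hypothetical incoming task for an LLM platform."""
    text: str
    customer_facing: bool  # True if the answer goes straight to a customer
    critical: bool         # True for high-stakes decisions


def route(req: Request) -> dict:
    """Choose a model type and safeguards for a request (illustrative policy)."""
    # Thinking models are limited to internal operations.
    model = "chat" if req.customer_facing else "thinking"
    return {
        "model": model,
        "retrieval": True,             # always ground answers in retrieved context
        "human_review": req.critical,  # a person signs off on critical decisions
    }
```

Under this policy, an internal, high-stakes request would go to a thinking model with retrieval and human review, while a routine customer question would go to a chat model.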

Some financial firms are applying comparable measures in lower-stakes scenarios across various LLM frameworks and seeing positive outcomes. For example:

- JPM’s guardrails for LLMs: J.P. Morgan’s AI for investment strategies leverages LLMs to analyze market trends, but human oversight remains essential for major financial decisions. Thinking LLMs are optimized to minimize speculation, relying on historical data patterns rather than generating outright predictions.

- Bloomberg’s specialized, domain-focused AI: Bloomberg trained a finance-specific LLM, BloombergGPT, instead of relying on general-purpose AI, to curb hallucinations by anchoring responses in market data. This helps lower the risk of misleading investment recommendations.

- Goldman Sachs limiting LLMs to internal use: Goldman Sachs uses LLMs for document summarization and compliance monitoring, but doesn’t let AI generate financial advice for clients.

Can Writer’s finance-focused LLM outperform OpenAI’s GPT-4?

Writer launched Palmyra Fin in 2024, a specialized LLM for finance-focused GenAI use cases.

Palmyra Fin reduces AI hallucinations through three key strategies:

- Domain-specific training: Palmyra Fin is fine-tuned on financial data, enhancing its accuracy and relevance in financial contexts.

- Graph-based Retrieval-Augmented Generation (RAG): Writer uses a graph-based RAG, improving the model’s ability to access and use accurate information. RAG is the process of finding the right data to answer a question and delivering it to the LLM. The LLM can then reason and generate an answer.

- Integrated AI guardrails: Writer’s platform includes AI guardrails that monitor and control the model’s outputs, ensuring they align with factual and compliant information.
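The RAG flow described above (find the right data, then hand it to the LLM) can be sketched in a few lines. This is a simplified illustration that ranks documents by keyword overlap; it is not Writer's graph-based approach, and the sample documents and the `tokens`, `retrieve`, and `build_prompt` helpers are made up for the example. The final call to an LLM is left out.

```python
import re

# Made-up sample documents standing in for a bank's knowledge base.
DOCS = [
    "Q3 net interest margin rose to 3.1 percent on higher loan yields.",
    "The bank's CET1 capital ratio stood at 12.4 percent at quarter end.",
    "Branch opening hours change on public holidays.",
]


def tokens(text: str) -> set[str]:
    """Lowercase a text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the question."""
    q = tokens(question)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the text that would be sent to the LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


question = "What was the CET1 capital ratio?"
prompt = build_prompt(question, retrieve(question, DOCS))
```

Here the retrieval step selects the capital-ratio sentence, so the model is asked to answer from that passage rather than from its general training data.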

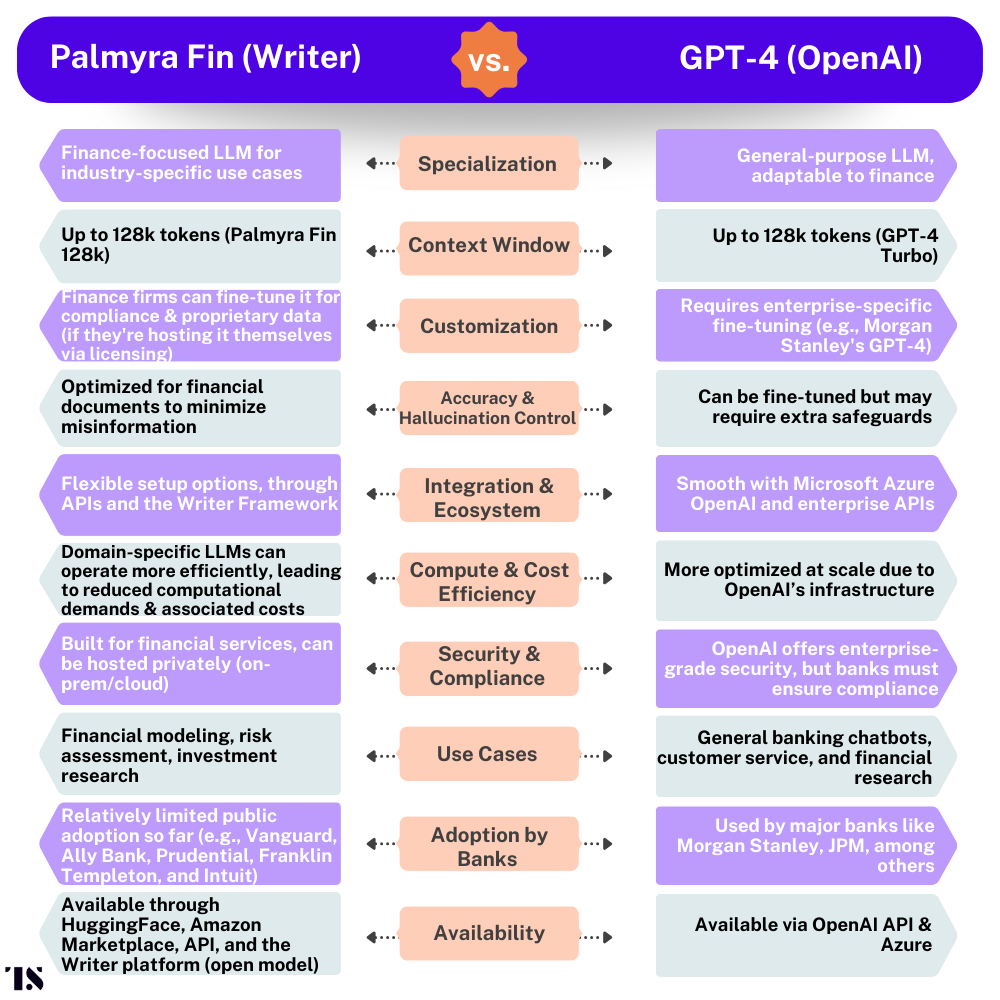

Writer competes with OpenAI in the LLM space: both provide models that can be integrated into chatbots and other financial applications. The key difference is that Writer develops both industry-specific models, such as those for finance, and broader models not tied to a particular sector, whereas OpenAI offers general-purpose models like GPT-4, which companies adapt to their needs.

Writer’s apparent edge in financial services comes from its domain-specific approach, with models like Palmyra Fin.

“Domain-specific LLMs are integral to the AI landscape because of their precision. Yet the industry at large has struggled to produce specialized models that meet the accuracy and efficiency requirements enterprises need,” says Waseem.

Even so, most major banks still prefer GPT-4 for their LLM needs. The difference in scale between OpenAI and Writer, whose Palmyra Fin debuted last year, could be one reason. The chart below highlights additional considerations that could be influencing major financial firms’ decisions:

Palmyra Fin’s niche focus could eventually carve out a place in the financial LLM space, but gaining traction may take time. Financial institutions (FIs) are risk-averse and tend to favor models that have already been thoroughly tested across multiple industries and have a solid track record of handling diverse queries.

Palmyra’s financial use case: Some financial firms are taking notice and have started to integrate Palmyra LLMs into select operations. Ally Bank, the 23rd-largest US commercial bank, is one of them.

“Palmyra LLMs have enabled leading financial institutions like Vanguard, Ally Bank, Prudential, and Franklin Templeton and innovative fintech companies like Intuit to power risk assessment, automated financial reporting, and AI-driven customer service,” notes Waseem.

Newest domain-specific model for finance: Most recently, Writer launched Palmyra Fin 128k. Similar to Palmyra Fin, Palmyra Fin 128k provides the underlying tech that can be integrated into different financial applications, including chatbots and other operational and platform tasks.

Its key distinguishing feature, however, is its extended context window. The “128k” denotes its context window, capable of processing up to 131,072 tokens in a single task or input session, allowing it to manage and analyze extensive financial documents, terminologies, and datasets.
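The 131,072 figure is simply 128 × 1,024. A small sketch of how a developer might check whether a document fits in that window follows; the `fits_in_window` helper is hypothetical, and it assumes, as is common with LLM APIs but not confirmed here for Palmyra Fin 128k, that tokens reserved for the model's answer count against the same window.

```python
# "128k" is read here as 128 * 1024 tokens, which matches the 131,072 figure.
CONTEXT_WINDOW = 128 * 1024


def fits_in_window(prompt_tokens: int, reserved_for_answer: int = 0) -> bool:
    """Return True if the prompt plus the reserved answer budget fits the window."""
    return prompt_tokens + reserved_for_answer <= CONTEXT_WINDOW
```

For instance, a filing that tokenizes to 120,000 tokens would fit with 4,000 tokens reserved for the answer, but not with 12,000 reserved.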

“By leveraging Palmyra Fin 128k, FIs can develop advanced tools for tasks such as market analysis, risk assessment, and regulatory reporting, thereby streamlining processes and facilitating data-driven decision-making,” notes Waseem.